Incremental loading using the Lookup Transformation in SQL Server Integration Services (SSIS) offers several benefits in the context of data warehousing and ETL (Extract, Transform, Load) processes:

Efficiency: Incremental loading allows you to update only the modified or new records in your database, reducing the processing time and resource consumption. The Lookup Transformation identifies those records by matching each incoming row against the existing table on a key column: rows with no match are new, and matched rows can then be compared for changes.

Faster Processing: By processing only the changed data, your ETL processes run faster, leading to quicker updates and shorter downtimes for your systems.

Reduced Data Movement: Instead of transferring the entire dataset, incremental loading moves only the necessary data, reducing the volume of data transferred between source and destination systems.

Lower Resource Usage: Incremental loading minimizes the use of system resources such as CPU, memory, and network bandwidth since it processes a smaller subset of data compared to a full load.

Real-time or Near Real-time Updates: Because each run processes only a small set of rows, incremental loads can be scheduled frequently, so the most recent changes reach the data warehouse sooner than they would with periodic full loads.

Historical Data Preservation: Incremental loading methods often include mechanisms to handle historical data, allowing you to keep track of changes over time for analytical purposes.

Minimized Impact on Source Systems: By extracting only new or modified records, incremental loading reduces the load on the source systems, ensuring that they can continue functioning smoothly even during ETL processes.

Scalability: The work done in each run depends mainly on the number of new or changed rows rather than on the total size of the table, so a well-implemented incremental load continues to perform as the data in your organization grows.

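Version Control: Incremental loading does not version data by itself, but when the rows changed in each run are stored separately (as the ‘ZZ_Members_Updated’ table does in the sample), you can track what changed and, if the earlier values are also retained, revert to a previous state if necessary.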

In summary, incremental loading using the Lookup Transformation in SSIS provides a more efficient, faster, and less resource-intensive way to keep your data warehouse up to date, which makes it a valuable technique in data integration and business intelligence scenarios.

The following sample shows how the SSIS package is set up, designed, and executed. The data is dummy data generated by AI.

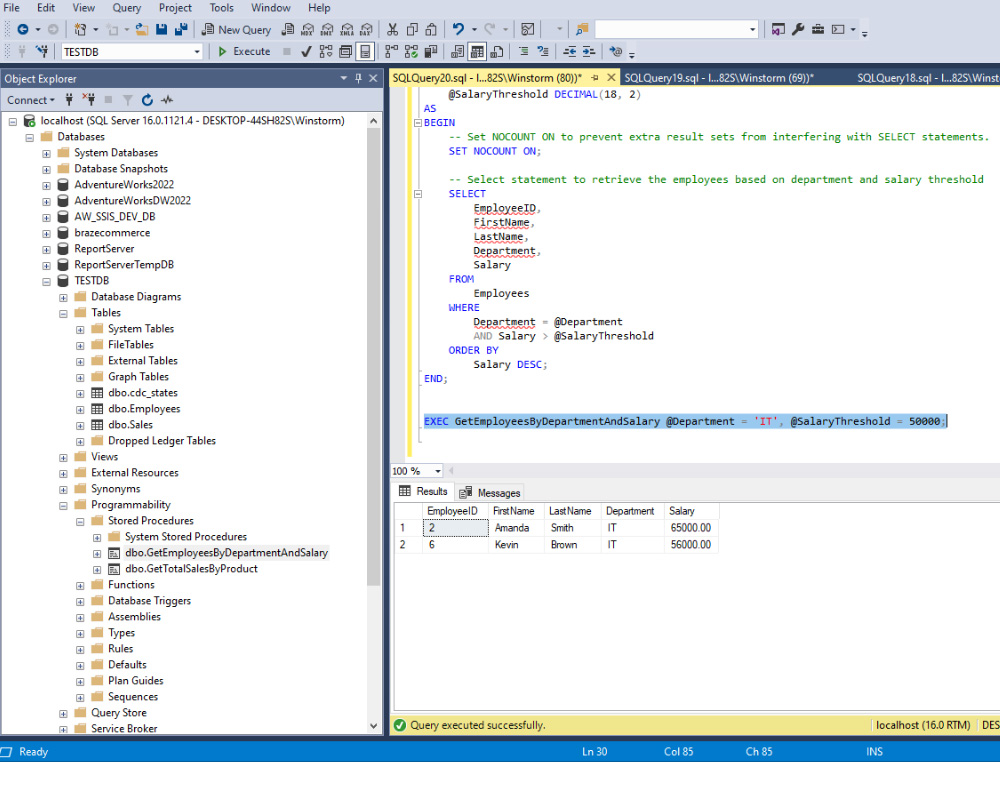

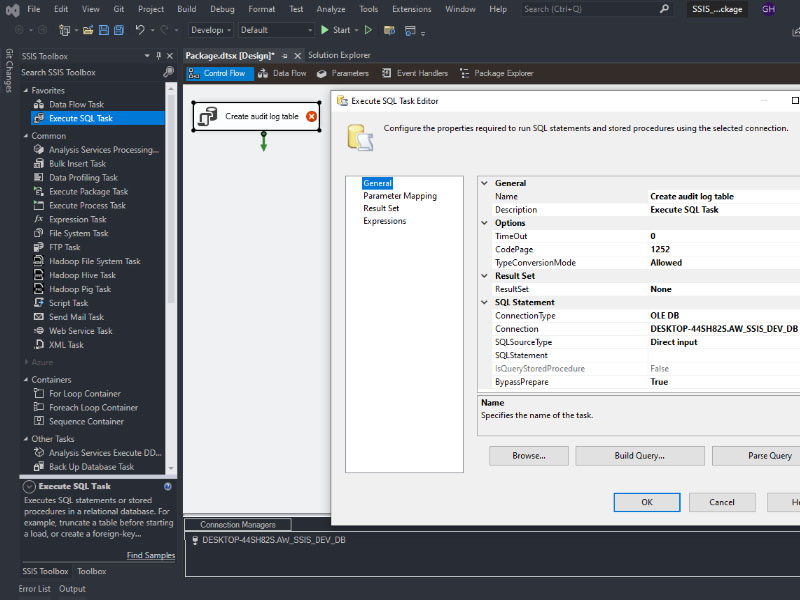

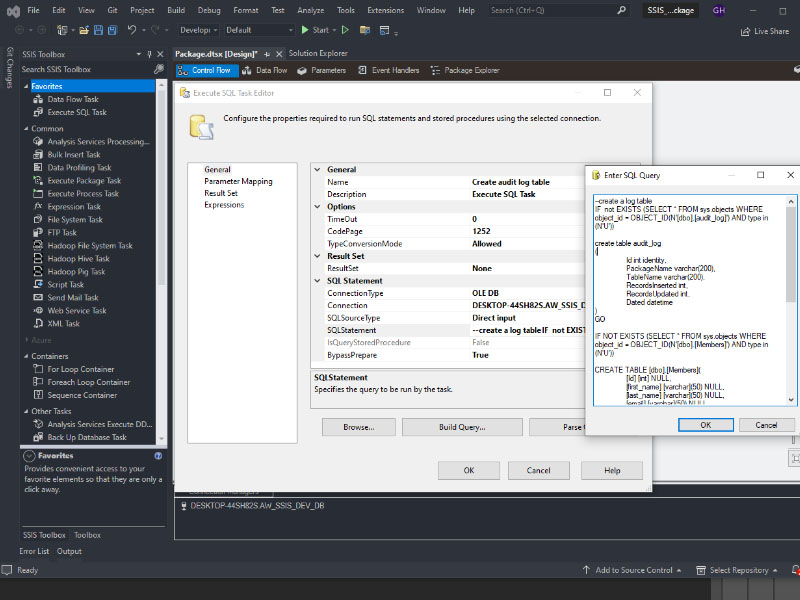

Start by adding an ‘Execute SQL Task’ component to the Control Flow panel and renaming the task.

In the ‘Execute SQL Task Editor’, enter the SQL statement in the ‘Enter SQL Query’ window.

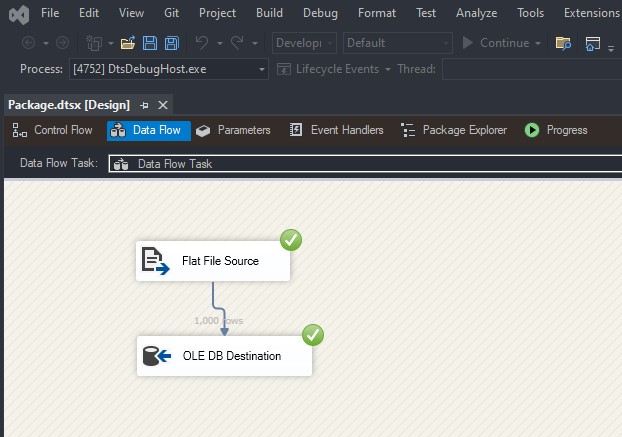

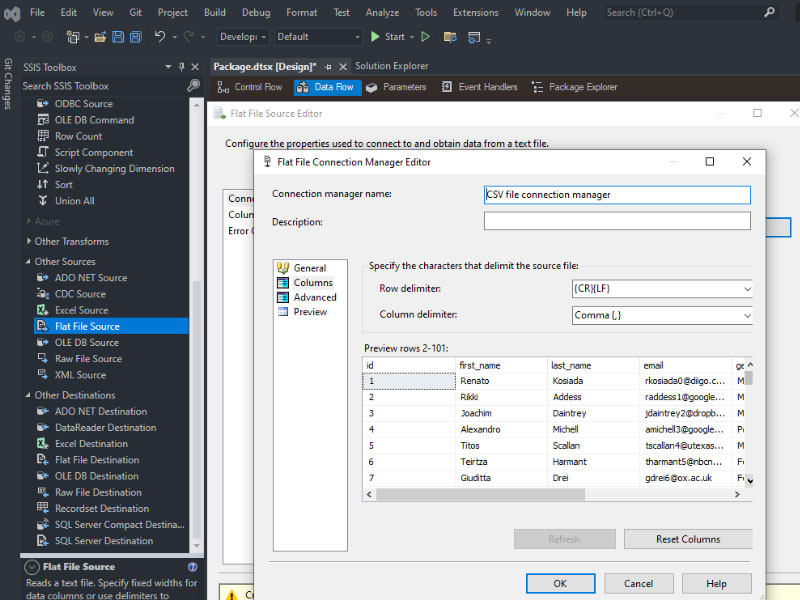
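
The text does not spell out the SQL statement itself. As a purely illustrative sketch, one common job for an initial Execute SQL Task in an incremental-load package is to empty the staging table before the data flow runs. The example uses Python's `sqlite3` module as a stand-in for the SQL Server connection; the column names and the choice of statement are assumptions, not taken from the package.

```python
import sqlite3

# In-memory SQLite stands in for the SQL Server connection (assumption).
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE ZZ_Members_Updated (member_id INTEGER, name TEXT, city TEXT)"
)
# Leftover row from a hypothetical previous run.
conn.execute("INSERT INTO ZZ_Members_Updated VALUES (1, 'Ann', 'Oslo')")

# The kind of statement an Execute SQL Task might run before the data flow:
# clear the staging table (on SQL Server, TRUNCATE TABLE is the usual choice).
conn.execute("DELETE FROM ZZ_Members_Updated")
conn.commit()

remaining = conn.execute("SELECT COUNT(*) FROM ZZ_Members_Updated").fetchone()[0]
```

Starting each run from an empty staging table means the rows found there afterwards belong to that run only.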

Next, add the ‘Flat File Source’ component on the ‘Data Flow’ panel. In the ‘Flat File Connection Manager Editor’, browse to the flat file you want to load, such as a CSV file.

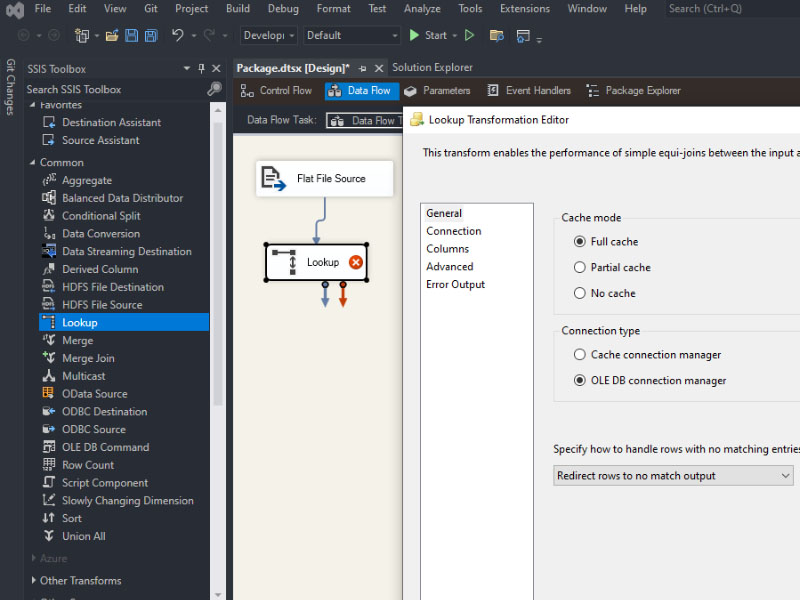

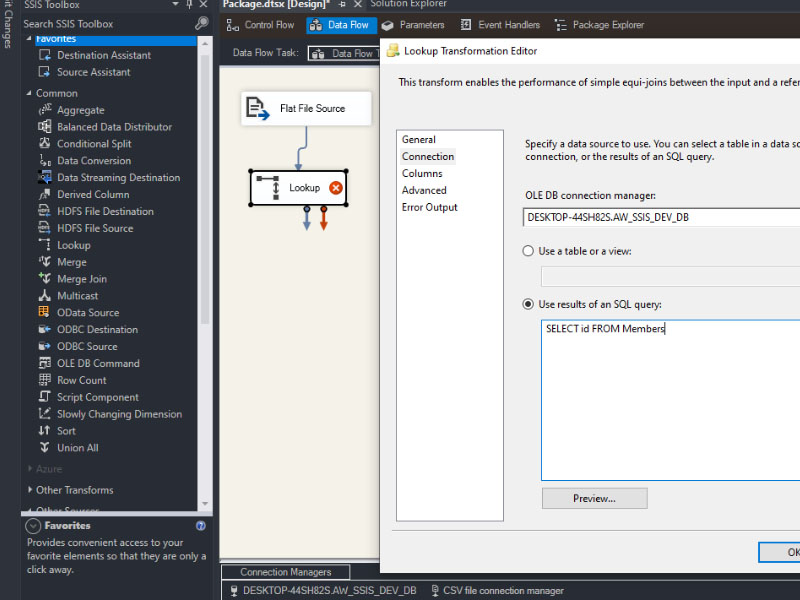

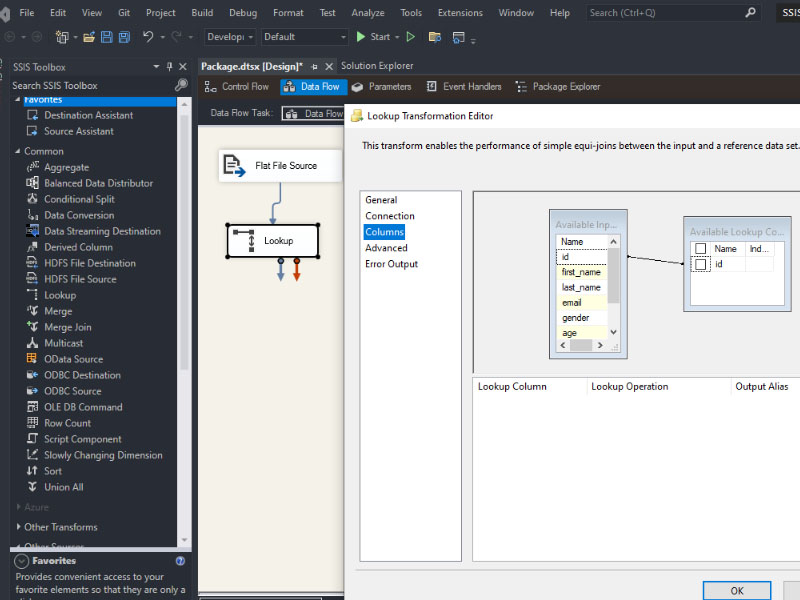

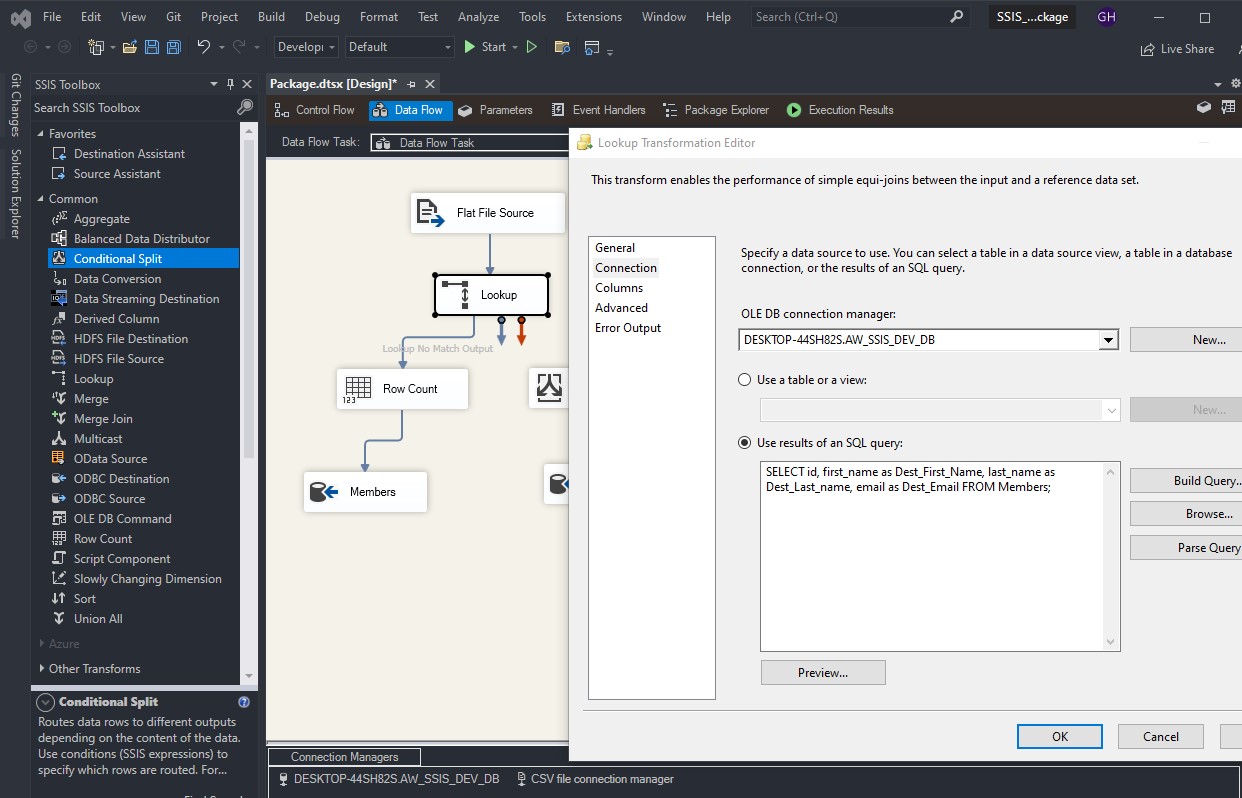

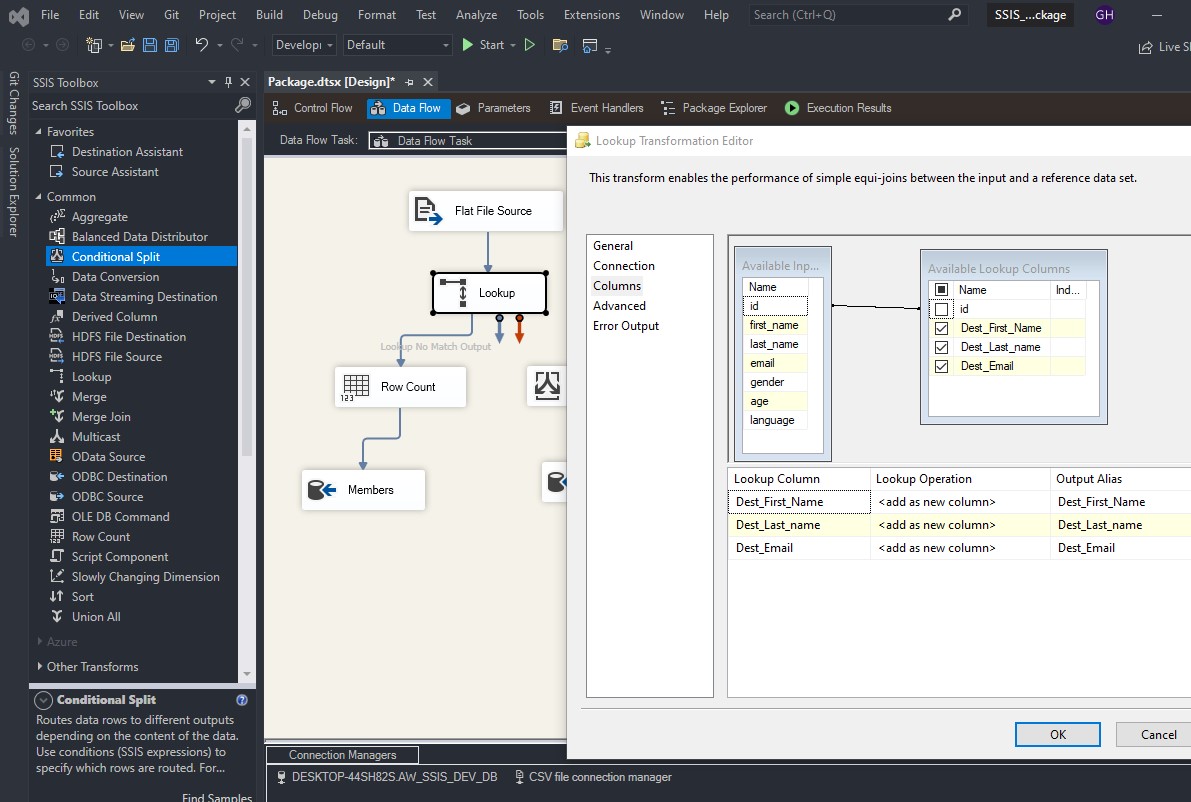
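
To make the role of the Flat File Source concrete, here is a minimal Python sketch that reads delimited text into typed rows. The file contents and the column names (`member_id`, `name`, `city`) are hypothetical; the actual sample file is only visible in the screenshots.

```python
import csv
import io

# Hypothetical file contents; a real package would point at a file path.
csv_text = "member_id,name,city\n1,Ann,Oslo\n2,Bob,Paris\n"


def read_flat_file(handle):
    """Yield one dict per data row, converting the key column to int."""
    for record in csv.DictReader(handle):
        record["member_id"] = int(record["member_id"])
        yield record


rows = list(read_flat_file(io.StringIO(csv_text)))
```

Converting the key column to an integer here mirrors the data type settings of the connection manager: the lookup key from the file has to be comparable with the key column in the database.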

Add the ‘Lookup’ component on the ‘Data Flow’ panel. In the ‘Lookup Transformation Editor’, choose the connection to the database, and then use the results of an SQL query as the reference data for the lookup operation.

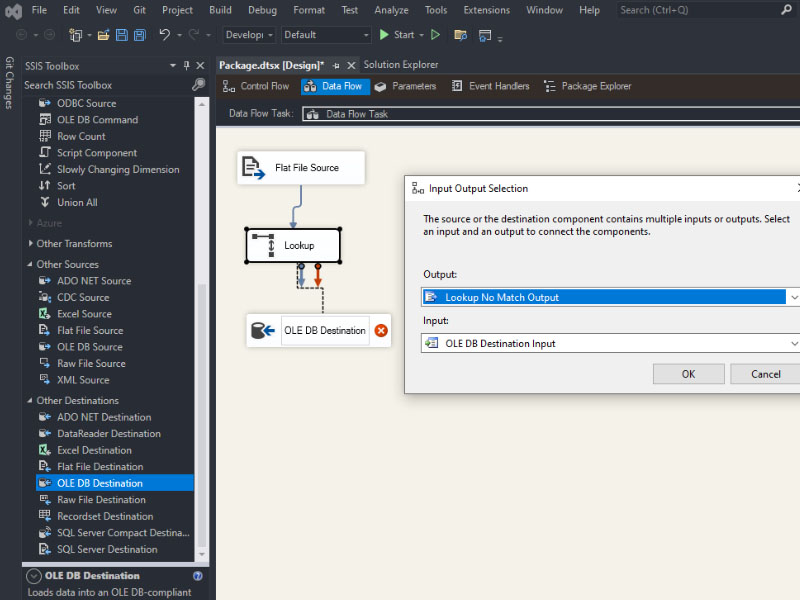

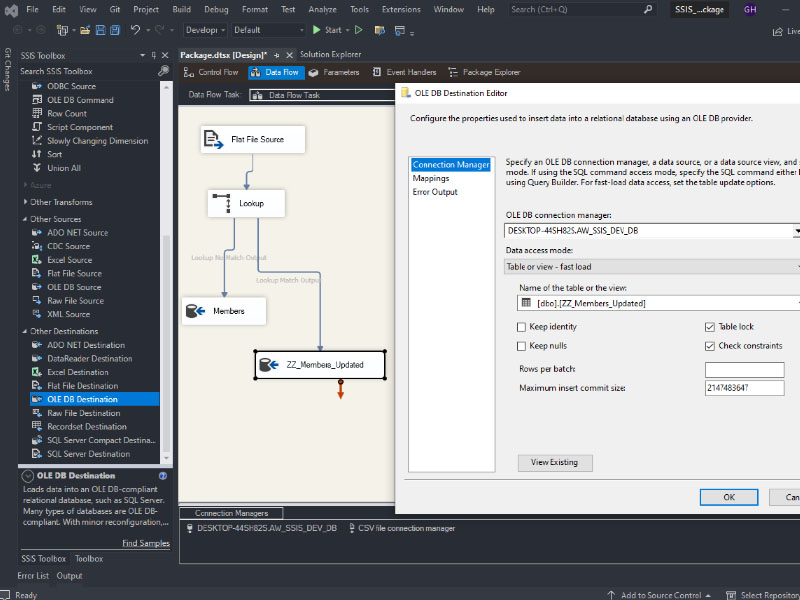

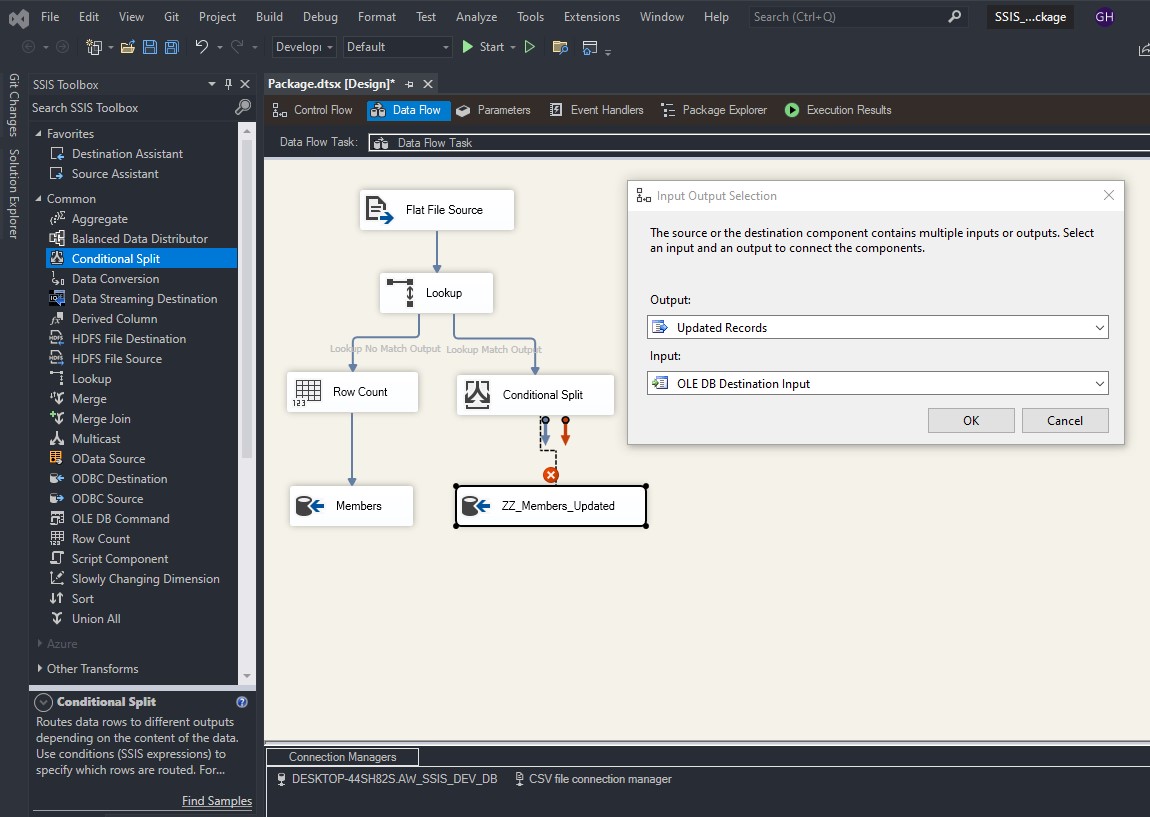
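
The idea behind the Lookup step can be sketched in a few lines of Python. The reference set is built from a SQL query and each incoming row is then routed to a match or a no-match output based on its key. The `Members` columns and the sample rows are assumptions for illustration, and `sqlite3` stands in for SQL Server.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE Members (member_id INTEGER PRIMARY KEY, name TEXT, city TEXT)"
)
conn.executemany(
    "INSERT INTO Members VALUES (?, ?, ?)",
    [(1, "Ann", "Oslo"), (2, "Bob", "Rome")],
)

# Reference set built from a SQL query and held in memory, similar in
# spirit to a Lookup that caches the results of its query.
reference = {
    member_id: (name, city)
    for member_id, name, city in conn.execute(
        "SELECT member_id, name, city FROM Members"
    )
}

incoming = [(2, "Bob", "Paris"), (3, "Cy", "Lima")]

match_output, no_match_output = [], []
for member_id, name, city in incoming:
    if member_id in reference:
        # Matched rows carry the looked-up columns next to the input columns.
        old_name, old_city = reference[member_id]
        match_output.append((member_id, name, city, old_name, old_city))
    else:
        # No match on the key: the row does not exist in the table yet.
        no_match_output.append((member_id, name, city))
```

In this sketch, rows in `no_match_output` are candidates for insertion, while rows in `match_output` still need to be compared column by column to decide whether anything changed.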

Add an ‘OLE DB Destination’ component on the ‘Data Flow’ panel and connect the Lookup to it. In the ‘Input Output Selection’ window, choose which output of the Lookup feeds the input of the destination.

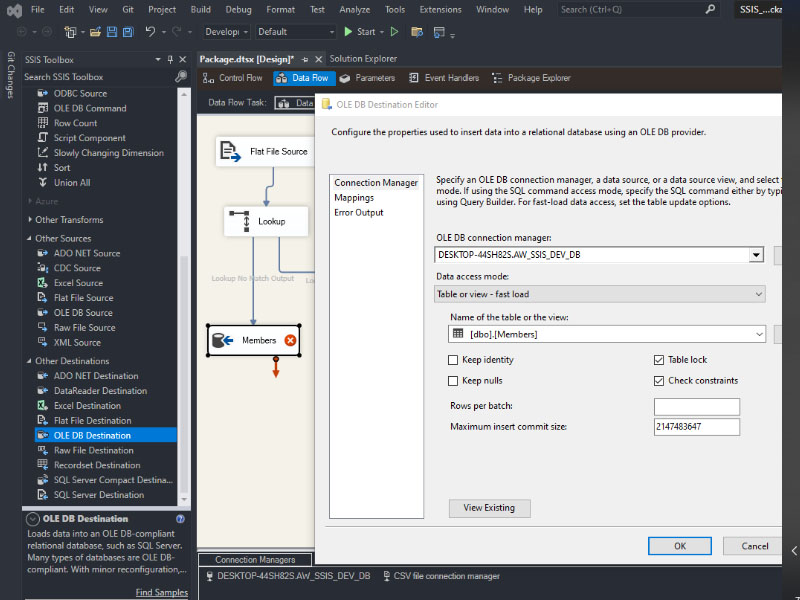

Rename the ‘OLE DB Destination’ component on the ‘Data Flow’ panel; for example, name it after the table where you want the data stored. In the ‘OLE DB Destination Editor’, choose the database connection and the target table.

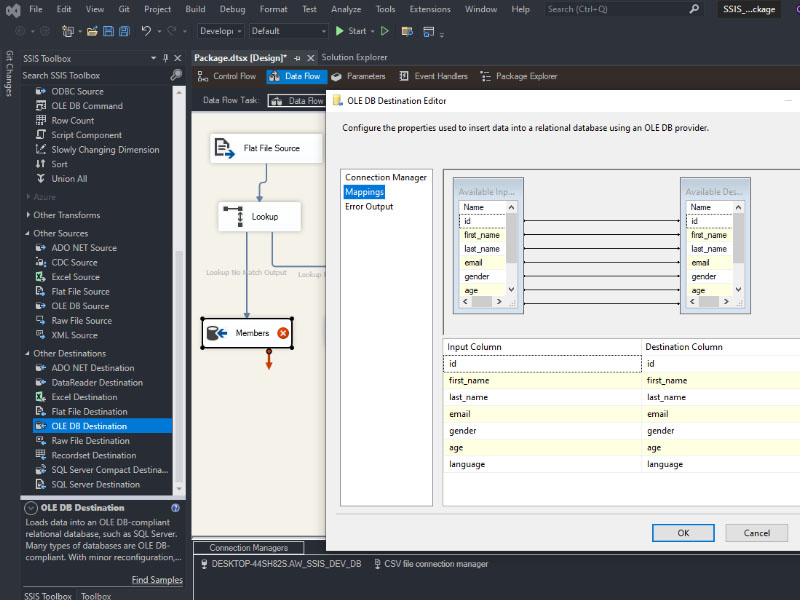

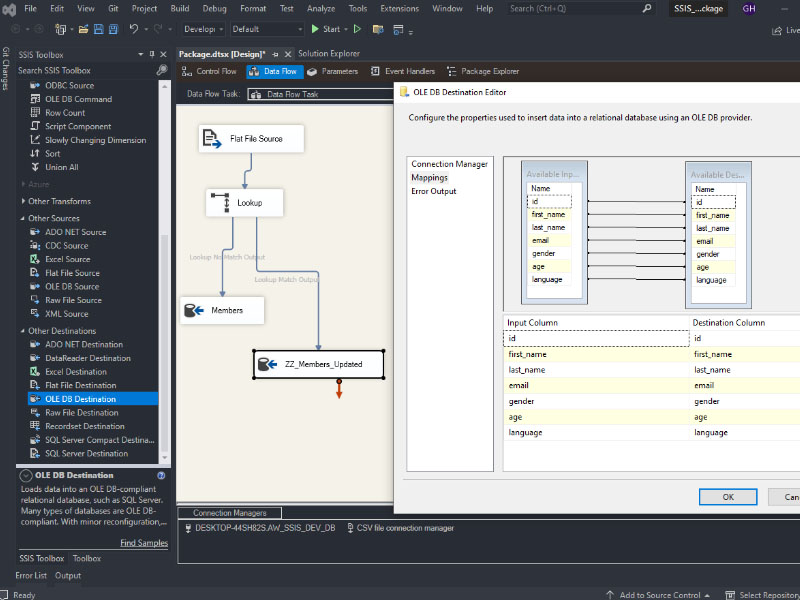

In the ‘OLE DB Destination Editor’, the ‘Mappings’ page shows how the input columns map to the columns of the destination table.

For the changed data, add an ‘OLE DB Destination’ component and rename it ‘ZZ_Members_Updated’. In the ‘OLE DB Destination Editor’, select the table that will store the updated data.

The ‘Mappings’ page of the ‘OLE DB Destination Editor’ again shows how the columns map to that table.

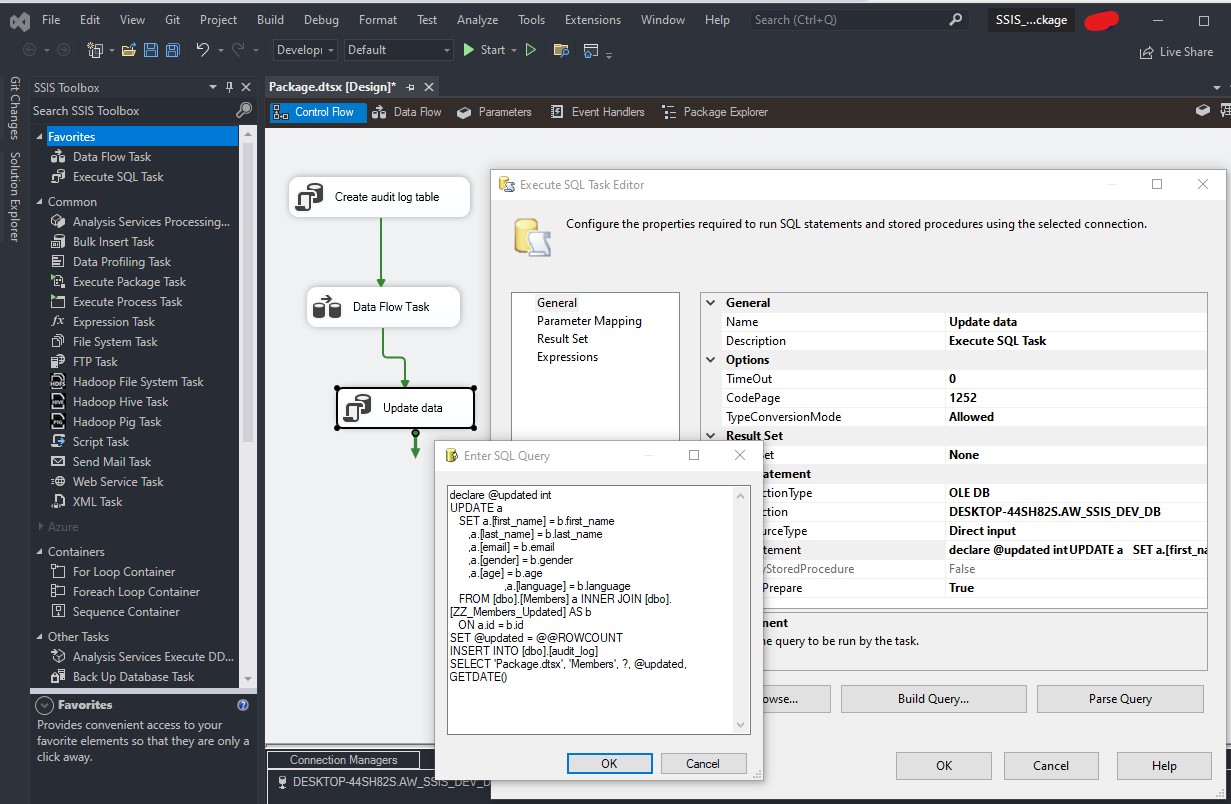

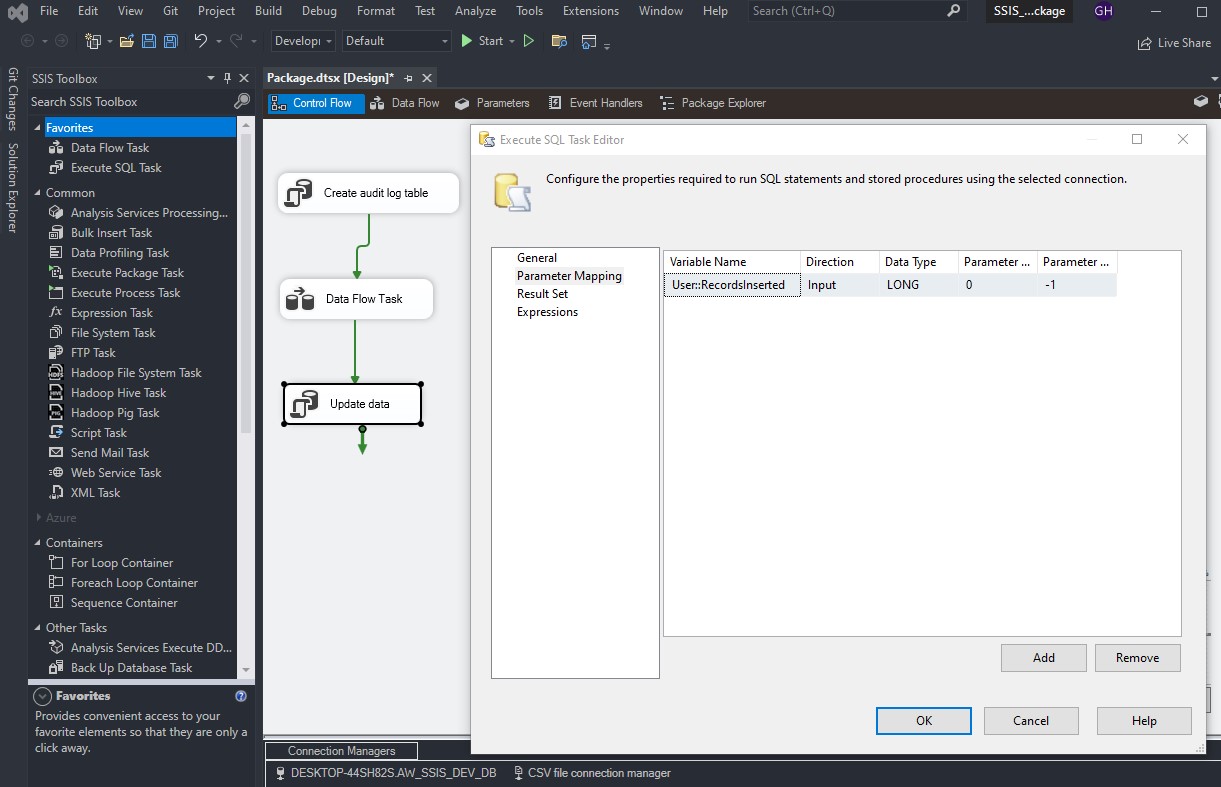

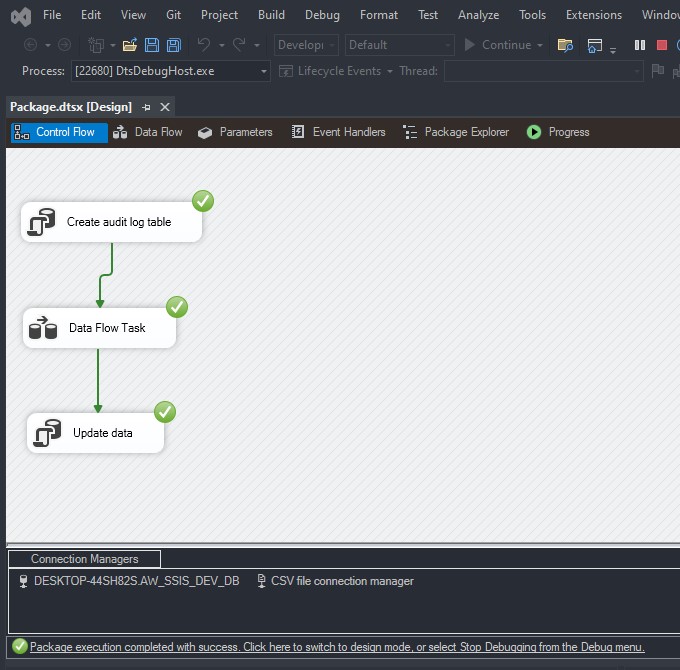
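
Column mapping in a destination amounts to pairing each input column with a destination column before the insert. The following Python sketch shows that idea under stated assumptions: the column names (including the renamed `full_name`) are hypothetical, and `sqlite3` stands in for the OLE DB connection.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE ZZ_Members_Updated (member_id INTEGER, full_name TEXT, city TEXT)"
)

# Input column -> destination column (hypothetical names).
column_mapping = {"member_id": "member_id", "name": "full_name", "city": "city"}


def write_rows(conn, table, mapping, rows):
    """Insert rows into table, renaming input columns per the mapping."""
    # Table and column names come from trusted configuration, not user input.
    dest_cols = ", ".join(mapping.values())
    placeholders = ", ".join("?" for _ in mapping)
    sql = f"INSERT INTO {table} ({dest_cols}) VALUES ({placeholders})"
    conn.executemany(sql, [tuple(row[src] for src in mapping) for row in rows])
    conn.commit()


write_rows(
    conn,
    "ZZ_Members_Updated",
    column_mapping,
    [{"member_id": 2, "name": "Bob", "city": "Paris"}],
)
stored = conn.execute(
    "SELECT member_id, full_name, city FROM ZZ_Members_Updated"
).fetchall()
```

When input and destination columns share the same names, the designer usually proposes the mapping automatically; an explicit mapping like the one above matters when the names differ.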

Add an ‘Execute SQL Task’ and rename it ‘Update Data’. In the ‘Execute SQL Task Editor’, select the database connection and enter the SQL statement that updates the data.

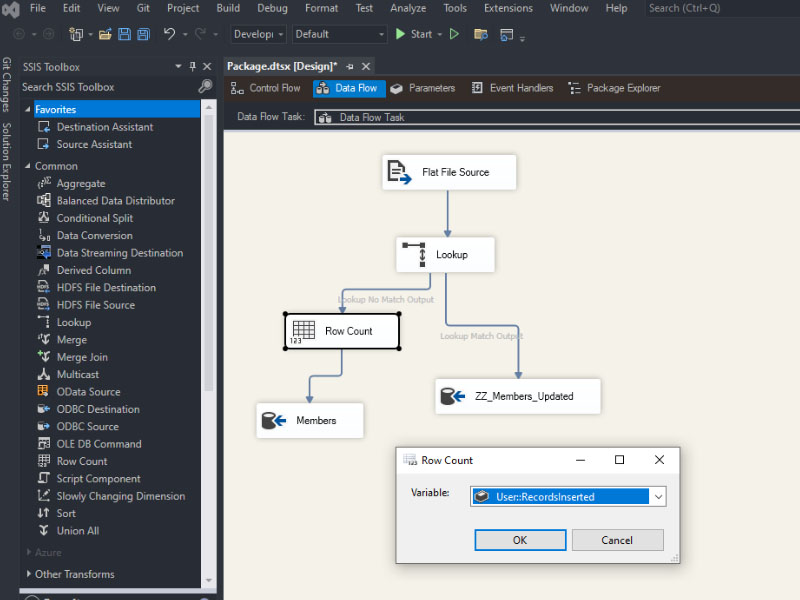
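
The update statement itself appears only in the screenshot. As a hedged sketch of what such a set-based update might look like, the Python example below copies staged values from ‘ZZ_Members_Updated’ onto the matching ‘Members’ rows. The column names are assumptions, and `sqlite3` stands in for SQL Server.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE Members (member_id INTEGER PRIMARY KEY, name TEXT, city TEXT);
    CREATE TABLE ZZ_Members_Updated (member_id INTEGER, name TEXT, city TEXT);
    INSERT INTO Members VALUES (1, 'Ann', 'Oslo'), (2, 'Bob', 'Rome');
    INSERT INTO ZZ_Members_Updated VALUES (2, 'Bob', 'Paris');
""")

# Copy staged values onto matching Members rows. Correlated subqueries keep
# the statement portable; on SQL Server an UPDATE ... FROM ... JOIN is the
# more usual form.
cursor = conn.execute("""
    UPDATE Members
    SET name = (SELECT u.name FROM ZZ_Members_Updated AS u
                WHERE u.member_id = Members.member_id),
        city = (SELECT u.city FROM ZZ_Members_Updated AS u
                WHERE u.member_id = Members.member_id)
    WHERE member_id IN (SELECT member_id FROM ZZ_Members_Updated)
""")
conn.commit()

updated_count = cursor.rowcount
members = conn.execute("SELECT * FROM Members ORDER BY member_id").fetchall()
```

Running one set-based statement after the data flow is generally cheaper than updating row by row inside the data flow, which is a common reason for staging changed rows first.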

Add the ‘Row Count’ component and assign a variable to store the row count.

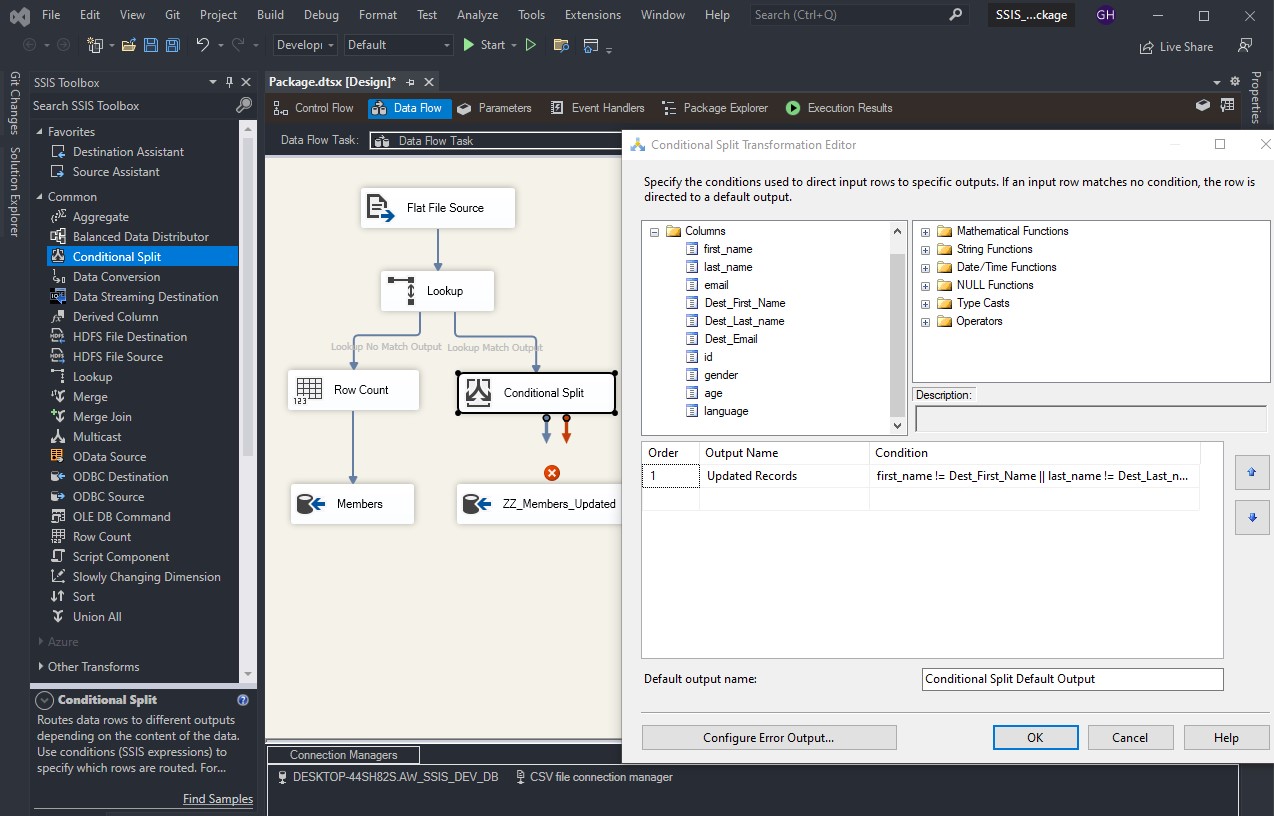

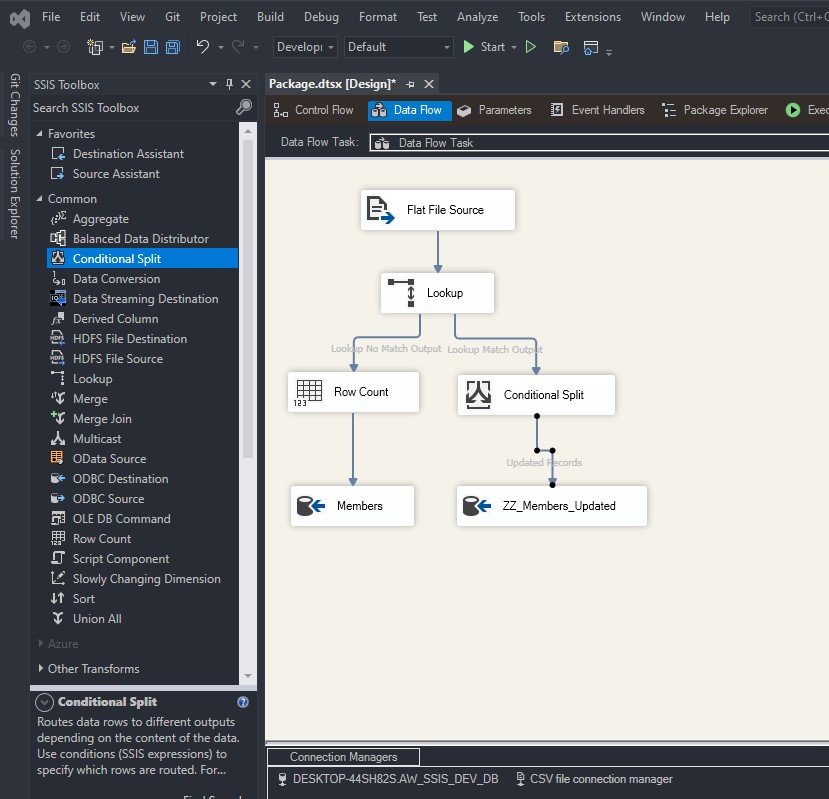
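
Conceptually, a Row Count step passes rows through untouched and records how many it saw. A minimal Python sketch of that behavior follows; the variable name `User::UpdatedRowCount` is hypothetical.

```python
def row_count(rows, variables, name):
    """Pass rows through unchanged while counting them into variables[name]."""
    variables[name] = 0
    for row in rows:
        variables[name] += 1
        yield row


# "User::UpdatedRowCount" is a hypothetical variable name.
variables = {}
rows = [{"member_id": 2}, {"member_id": 5}, {"member_id": 9}]
passed = list(row_count(rows, variables, "User::UpdatedRowCount"))
```

In SSIS the variable receives its final value only once the data flow has finished, so it is best read by a later task in the control flow rather than by another component in the same data flow.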

Add the ‘Conditional Split’ component, define a condition in the ‘Conditional Split Transformation Editor’, and assign an output name.

When you connect the Conditional Split to ‘ZZ_Members_Updated’, choose the output and input in the ‘Input Output Selection’ window.

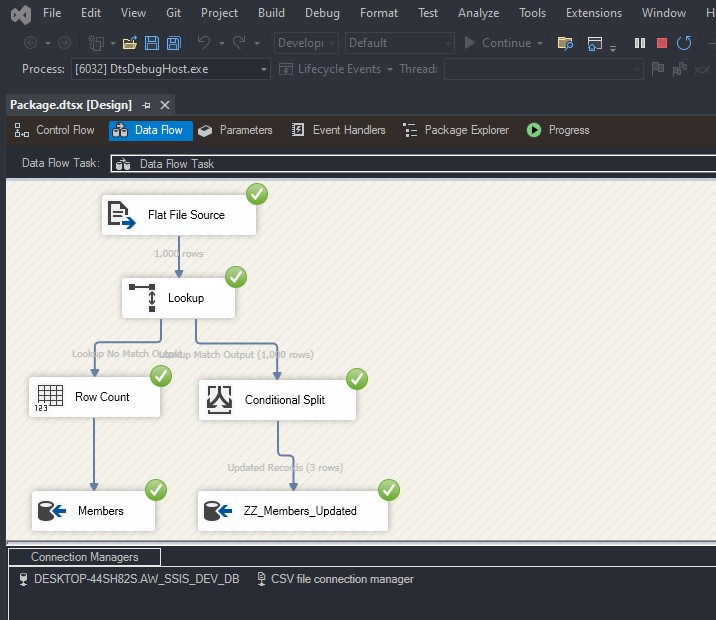
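
A Conditional Split evaluates its conditions in order and sends each row to the first output whose condition is true; rows that satisfy none go to the default output. The Python sketch below illustrates this with a single condition that flags changed rows. The column names, including `lkp_city` for the value returned by the Lookup, and the output name `Changed` are assumptions.

```python
def conditional_split(rows, conditions, default_output="Default"):
    """Route each row to the first output whose condition is true."""
    outputs = {name: [] for name, _ in conditions}
    outputs[default_output] = []
    for row in rows:
        for name, predicate in conditions:
            if predicate(row):
                outputs[name].append(row)
                break
        else:
            # No condition matched, so the row goes to the default output.
            outputs[default_output].append(row)
    return outputs


# Hypothetical columns: lkp_city is the value returned by the Lookup.
conditions = [("Changed", lambda r: r["city"] != r["lkp_city"])]
rows = [
    {"member_id": 1, "city": "Oslo", "lkp_city": "Oslo"},
    {"member_id": 2, "city": "Paris", "lkp_city": "Rome"},
]
outputs = conditional_split(rows, conditions)
```

Only the `Changed` output would be connected to ‘ZZ_Members_Updated’ in this sketch, so unchanged rows never reach the staging table.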

Once all components are configured, run the package.

In this example, the rows flow from the Flat File Source through every component successfully.

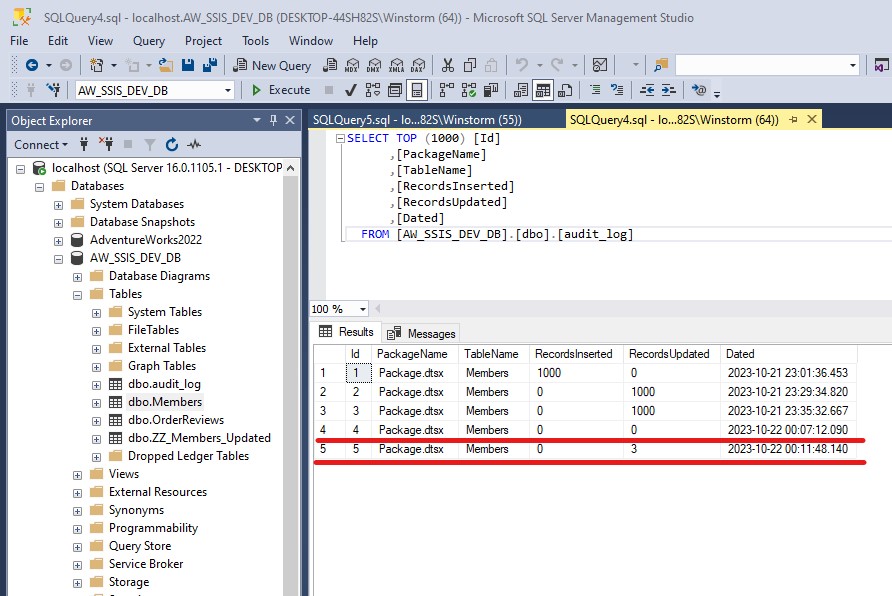

In SQL Server, the ‘audit_log’ table now records that 3 rows were modified.

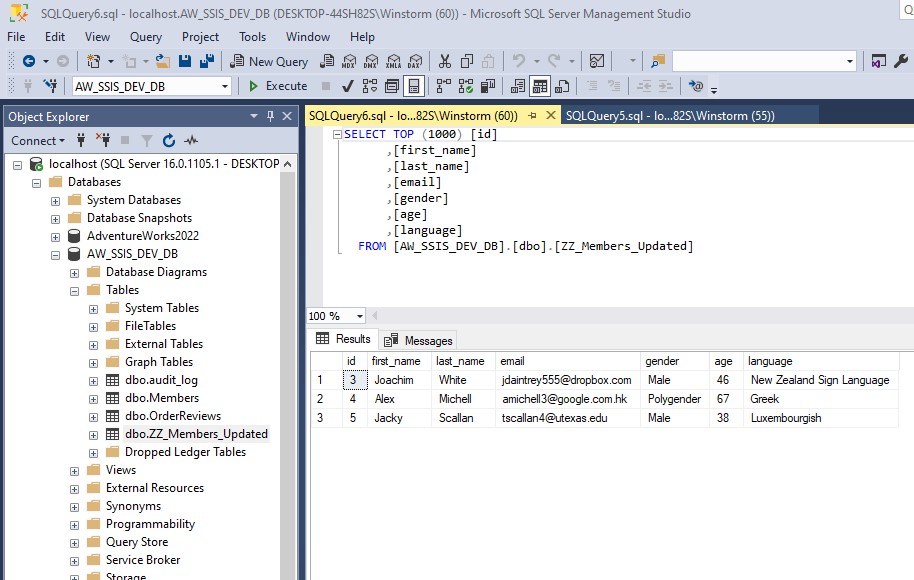
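
The text does not say which task writes to ‘audit_log’ or what columns the table has. As an illustrative sketch only, the Python example below records a run and its row count using `?` parameter markers, the same style of marker that an Execute SQL Task uses with an OLE DB connection. The table layout and the package name are hypothetical, and `sqlite3` stands in for SQL Server.

```python
import sqlite3
from datetime import datetime, timezone

conn = sqlite3.connect(":memory:")
# Hypothetical audit_log layout; the real table's columns are not shown.
conn.execute(
    "CREATE TABLE audit_log (package_name TEXT, rows_modified INTEGER, logged_at TEXT)"
)


def log_run(conn, package_name, rows_modified):
    """Record one package run; '?' markers keep values out of the SQL text."""
    conn.execute(
        "INSERT INTO audit_log VALUES (?, ?, ?)",
        (package_name, rows_modified, datetime.now(timezone.utc).isoformat()),
    )
    conn.commit()


log_run(conn, "IncrementalLoadMembers", 3)
logged = conn.execute(
    "SELECT package_name, rows_modified FROM audit_log"
).fetchall()
```

Passing the count as a parameter rather than building it into the SQL string is what allows a package variable, such as the one filled by the Row Count component, to be mapped to the statement.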

The table ‘ZZ_Members_Updated’ shows which 3 records have been modified.

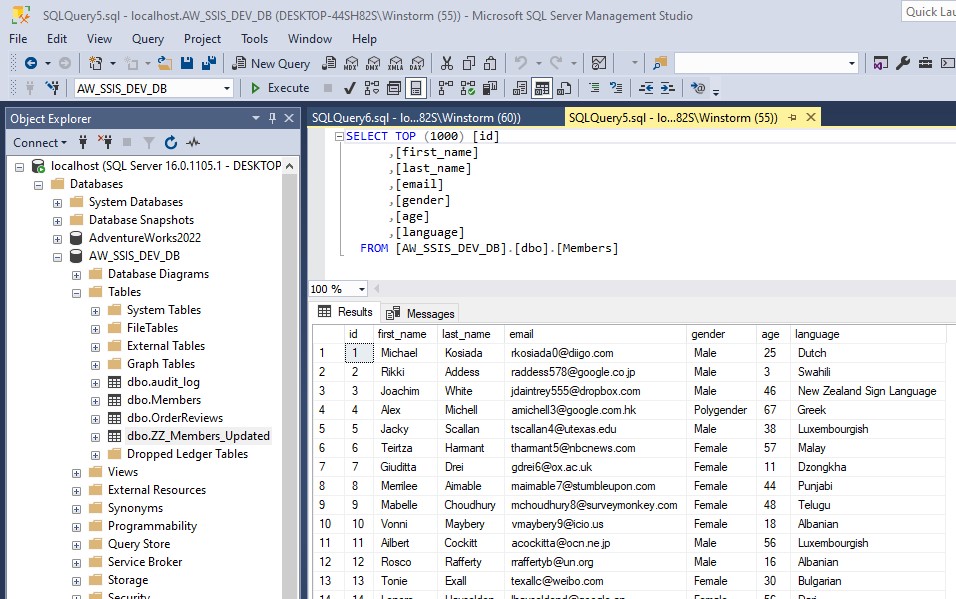

The ‘Members’ table contains the updated data after the package has run.

Data Source: Dummy data generated by AI
